Part 4: The Anatomy of AI Agents - Practical Security Implications

In Part 3, we explored the core components of AI agents—the Brain, Perception, and Action modules—and the specific security vulnerabilities each introduces. Now, let's examine how these vulnerabilities create practical security challenges and discuss approaches for mitigating these risks.

Practical Security Implications

Understanding individual component vulnerabilities is important, but the real security challenge emerges when we consider how these vulnerabilities interact in practice.

The interconnected nature of AI agent components creates a security challenge greater than the sum of its parts. Vulnerabilities in one component can cascade through the system, creating complex attack scenarios that traditional security approaches may struggle to address.

Consider these real-world scenarios that illustrate the cascading nature of AI agent vulnerabilities:

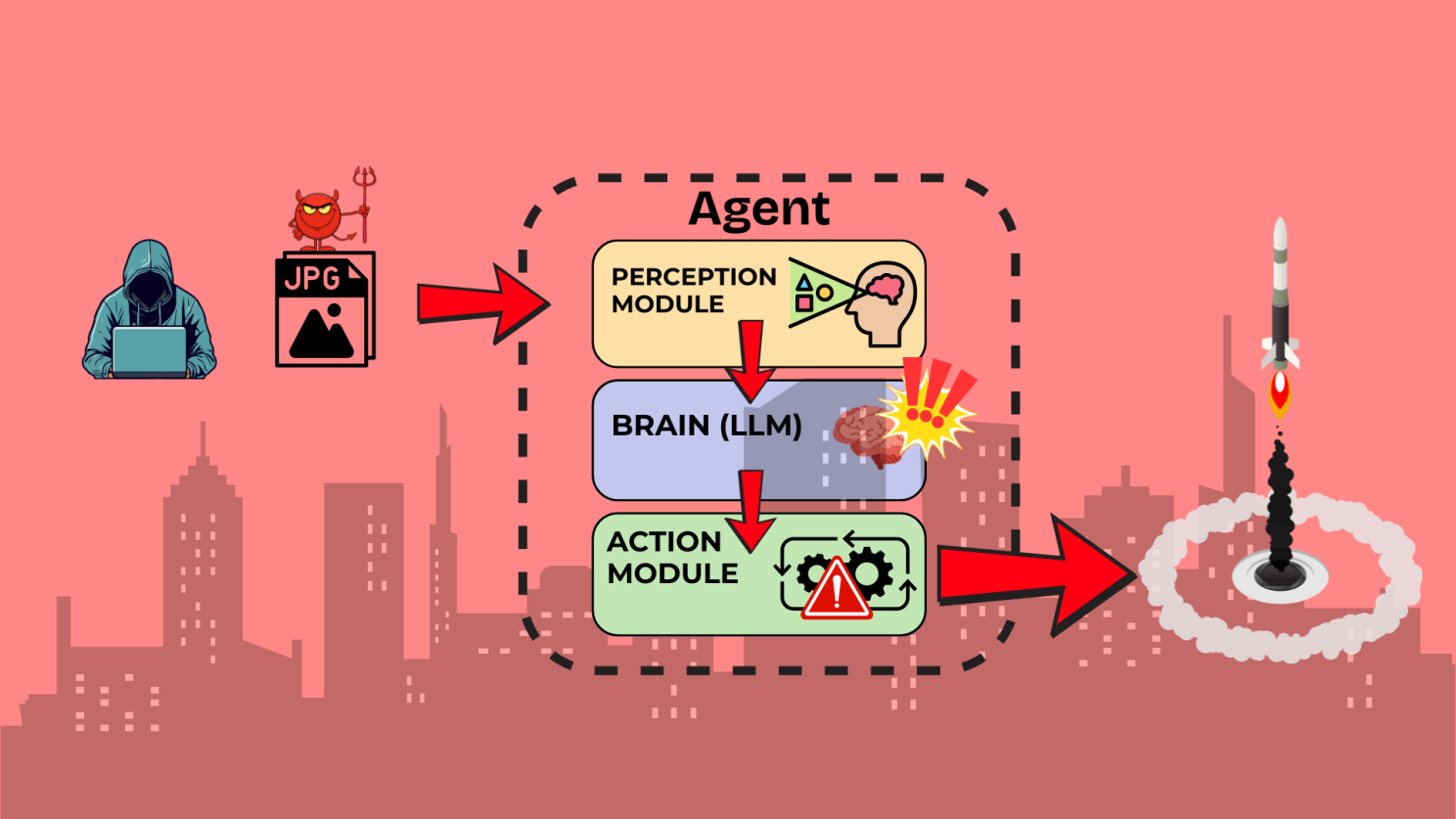

- The Perception-Brain-Action Attack Chain:

- An attacker injects adversarial content into an image processed by the Perception module.

- This causes the Brain to misinterpret the context and make an incorrect decision.

- The Action module then executes this flawed decision, potentially affecting critical systems.

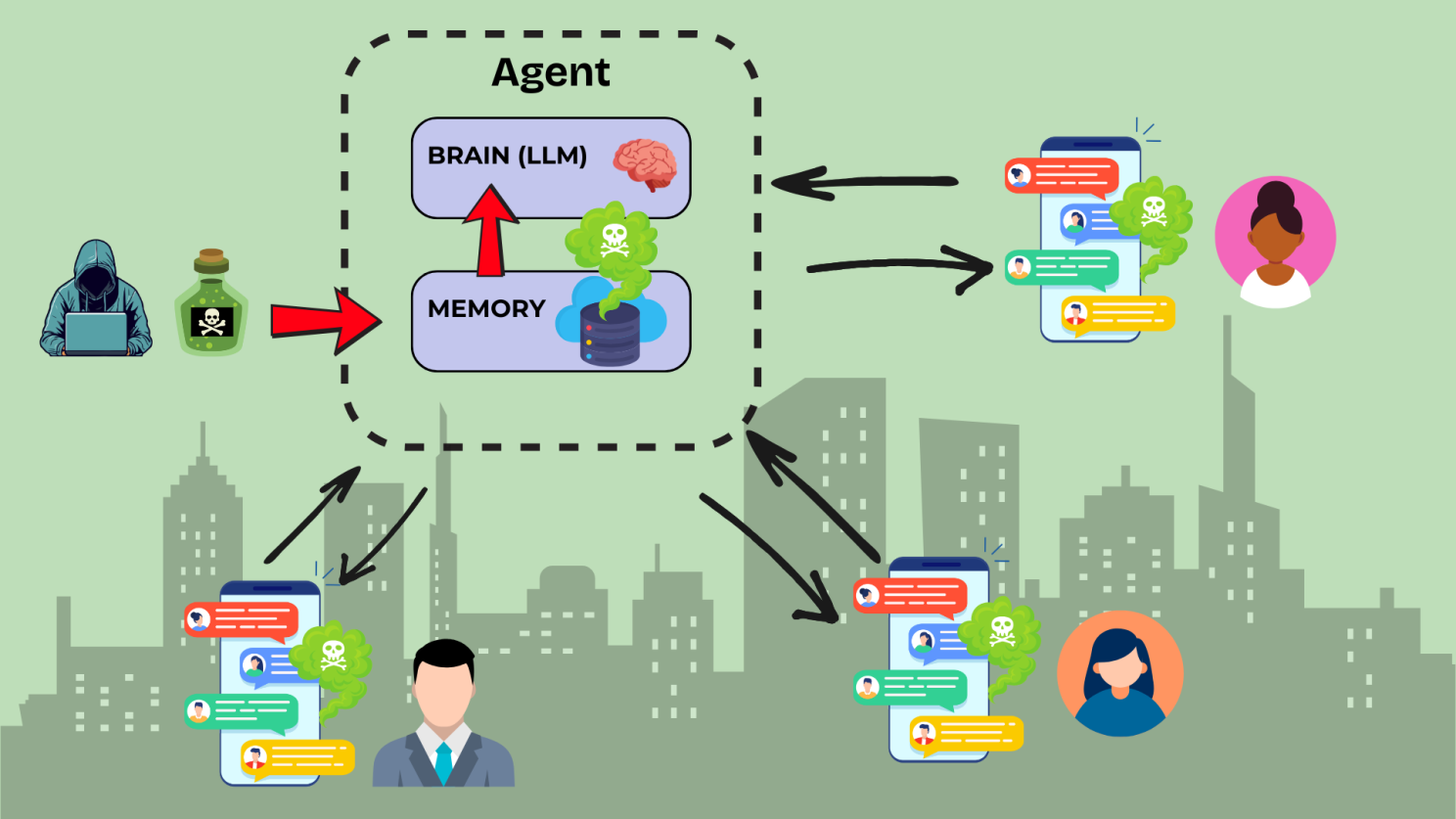

2. The Memory Poisoning Persistence:

2. The Memory Poisoning Persistence:

- An attacker uses prompt injection to insert malicious content into the agent's memory.

- This poisoned memory persists across sessions and influences future decisions.

- Even after the initial attack vector is addressed, the agent continues to make compromised decisions.

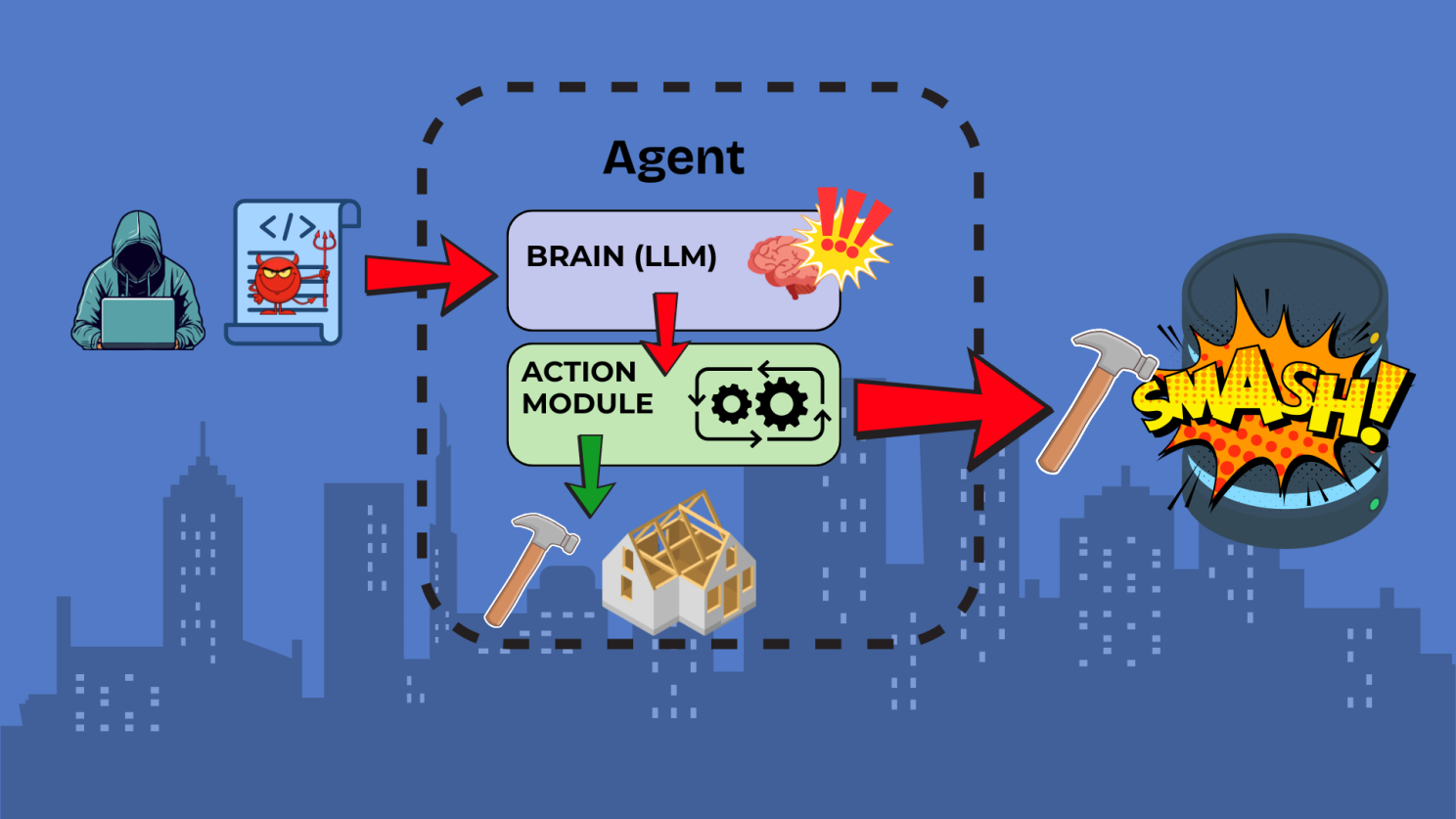

3. The Tool Escalation Scenario:

3. The Tool Escalation Scenario:

- An attacker manipulates the agent into using an authorised tool in an unintended way.

- This creates access to additional capabilities beyond the original security boundaries.

- These new capabilities are then used to execute actions that bypass existing security controls.

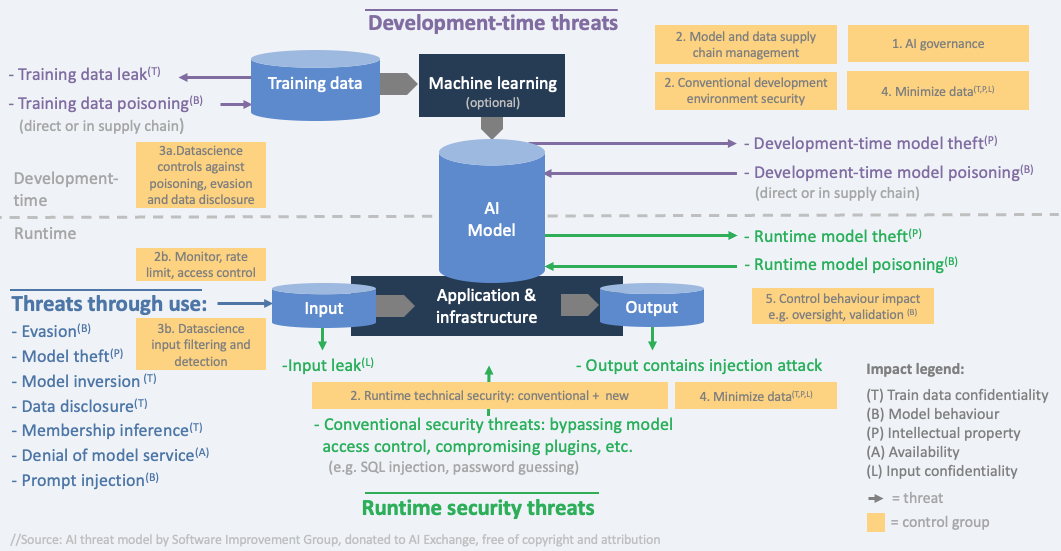

The evolving threat landscape presents additional challenges as AI agents gain new capabilities:

- Dynamic Tool Creation: As agents develop the ability to create their own tools, security teams must address the challenge of evaluating tools that didn't exist during initial security assessments.

- Multi-agent Interactions: When multiple agents work together, security vulnerabilities can propagate across the system, potentially creating new attack vectors that wouldn't exist with isolated agents.

- Emergent Behaviours: Advanced agents may develop emergent behaviours not anticipated by their designers, creating security implications that are difficult to predict and mitigate in advance.

These practical implications highlight the need for security approaches specifically designed for the unique challenges presented by AI agents, moving beyond traditional application security paradigms.

Security Principles for AI Agents

While AI agents present novel security challenges, many traditional security principles can be adapted and applied to this new context. Here's how to apply security fundamentals to the unique architecture of AI agents:

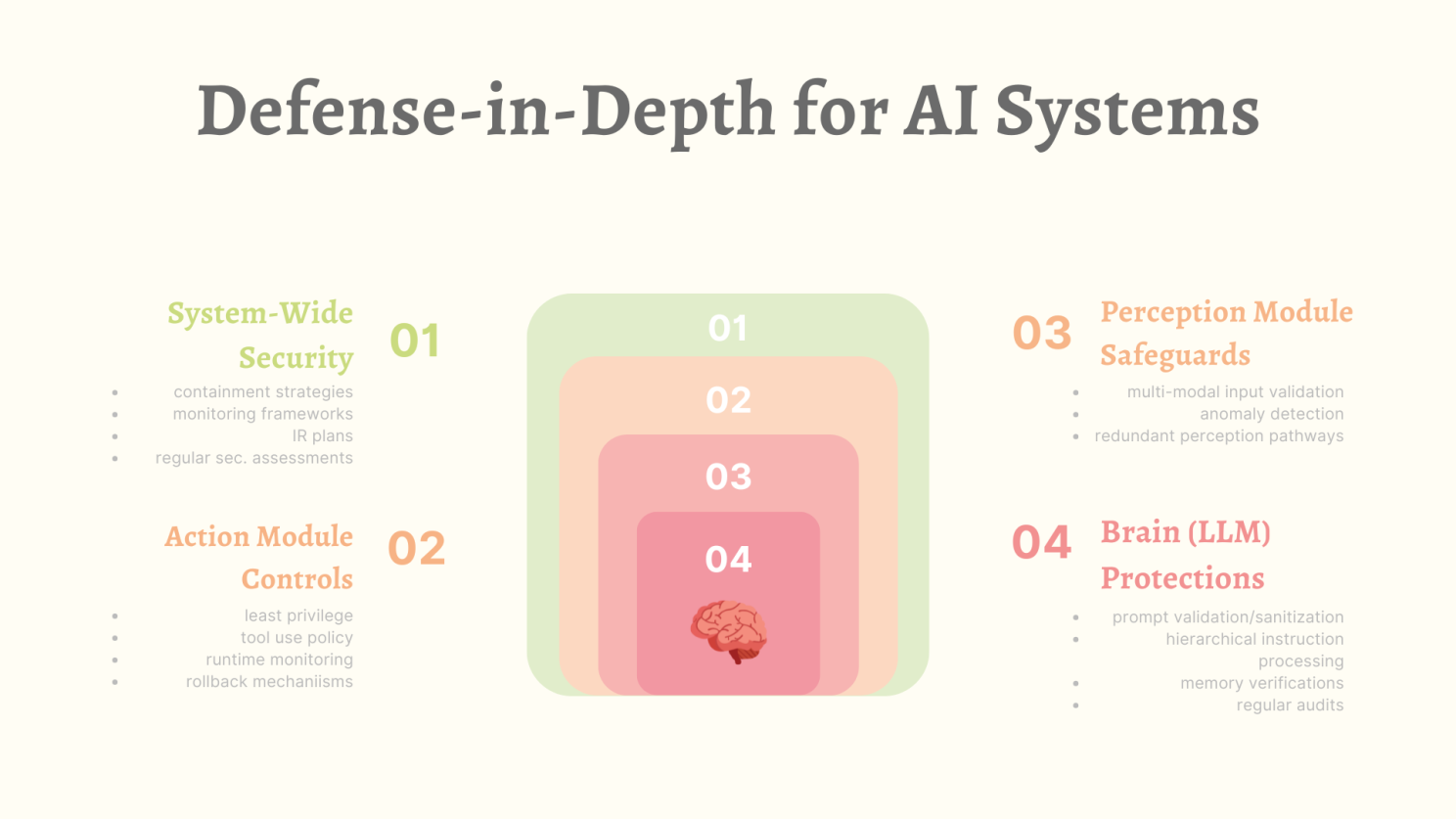

Defense-in-Depth for AI Systems

-

Component-Specific Mitigations:

- Brain (LLM) Protections:

- Implement robust prompt validation and sanitization.

- Develop hierarchical instruction processing with clear priority frameworks.

- Create memory integrity verification mechanisms.

- Regularly audit and clear sensitive information from agent memory.

- Perception Module Safeguards:

- Deploy multi-modal input validation across all supported formats.

- Implement anomaly detection for unusual input patterns.

- Create redundant perception pathways for critical inputs.

- Regular testing with adversarial examples to improve robustness.

- Action Module Controls:

- Implement principle of least privilege for tool access.

- Create tool and API usage policies specific to agent capabilities.

- Deploy runtime monitoring for unusual or potentially harmful actions.

- Develop rollback mechanisms for all agent-initiated changes.

- Brain (LLM) Protections:

-

System-Wide Security Measures:

- Containment Strategies: Sandbox environments where agents can be tested before deployment.

- Monitoring Frameworks: Specialised logging and monitoring designed for AI agent activities.

- Incident Response Plans: Procedures specifically designed for AI agent compromise scenarios.

- Regular Security Assessments: Continuous testing with specialised focus on AI-specific vulnerabilities.

Applying Zero Trust Principles to AI Agents

Taking a page out of what's recommended in the NIST AI Risk Management Framework, applying zero trust architecture to AI systems provides a strong foundation for agent security:

- Never Trust, Always Verify: Treat the agent's components as potentially compromised and verify all transfers between components.

- Least Privilege Access: Limit each agent's access to only the tools and data necessary for its specific function.

- Continuous Authentication: Regularly validate that the agent is operating as expected through behavioral analysis.

- Micro-segmentation: Create boundaries between different agent functions and the systems they interact with.

Developing AI-Specific Security Controls

- Prompt Engineering Security: Creating prompts with security in mind, including built-in guardrails and instruction validation.

- Alignment Techniques: Using techniques like RLHF (Reinforcement Learning from Human Feedback) to improve agent security posture.

- Agent Supervision Models: Creating overseer agents specifically designed to monitor and validate the actions of operational agents.

- Security-Focused Fine-tuning: Additional training specifically designed to reduce vulnerability to known attack vectors.

The application of these security principles requires a collaborative approach between AI development teams and security professionals, with security considerations integrated throughout the agent development lifecycle rather than applied as an afterthought.

Conclusion: Securing the Future of AI Agents

As we conclude our exploration of agentic AI security, it's clear that these systems require both adaptation of traditional security principles and development of entirely new approaches. The interconnected nature of AI agent components creates complex security challenges that will continue to evolve as agent capabilities advance.

Key Takeaways from This Series:

- Part 1: AI agents represent autonomous systems with unique security implications

- Part 2: Three evolutionary shifts have fundamentally altered the AI security landscape

- Part 3: Each agent component introduces specific vulnerabilities that can cascade through systems

- Part 4 (this post): Practical defense strategies require both traditional principles and AI-specific controls

Moving Forward

For security professionals facing the immediate challenge of securing AI agents in their environments, the most important first step is developing a comprehensive understanding of how these systems function and the specific vulnerabilities they introduce. This understanding forms the foundation for effective security strategies.

The security landscape will continue evolving as AI agents become more sophisticated. Staying informed about emerging threats, maintaining strong fundamentals, and adapting security practices will be essential for protecting against both current and future risks.

Continue the Discussion

This concludes our four-part exploration of agentic AI security. If you're implementing AI agents in your organization or have insights about securing these systems, I'd love to hear about your experiences and challenges.

Series Navigation:

- Part 1: The Rise of Agentic AI - A Security Perspective

- Part 2: Evolution - Three Critical Shifts in the AI Security Landscape

- Part 3: The Anatomy of AI Agents - Security Vulnerabilities

- Part 4: Practical Security Implications (this post)

Questions about AI agent security? Reach out via LinkedIn or email.

Further Reading/References

- OWASP Top 10 for Large Language Model Applications - Critical reference for standardized security vulnerabilities in LLM applications

- NIST AI Risk Management Framework - Comprehensive framework for AI risk assessment and management

- Targeting the Core: A Simple and Effective Method to Attack RAG-based Agents via Direct LLM Manipulation - Research on vulnerabilities in retrieval-augmented generation systems

- LLM-to-LLM Prompt Infection Research - Exploration of how malicious prompts can propagate between language models

- Anthropic's AI Safety Research - Industry research on alignment and safety in advanced AI systems

- Goodfellow et al. - Explaining and Harnessing Adversarial Examples - Seminal research on adversarial attacks in machine learning systems

- Dolphin Attack: Inaudible Voice Commands - Research demonstrating audio-based attacks on voice recognition systems

- Microsoft's AI Security Assessment Framework - Framework for evaluating and enhancing AI system security